David Porfirio, Ph.D.

Assistant Professor

Computer Science Department

George Mason University

Director:

Autonomous Robot Interaction Laboratory

About Me

I am an Assistant Professor of Computer Science at George Mason University. My research intersects

At Mason, I direct the Autonomous Robot Interaction Laboratory. I am also a member of the Mason Autonomy and Robotics Center.

Before joining Mason, I was a computer scientist at the US Naval Research Laboratory (NRL), where I had previously been a postdoctoral research associate. I received my Ph.D. from the University of Wisconsin-Madison in 2022, advised by Drs. Bilge Mutlu and Aws Albarghouthi.

You can find my CV here.

News

Projects

Human-Like LLM Planning

RO-MAN 2025

Evaluates the ability of four different LLMs to produce human-like plans.

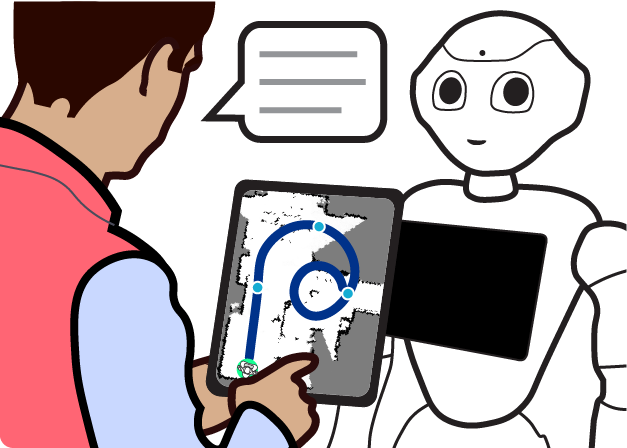

Uncertainty Expression

AAMAS 2025

Three different interfaces for eliciting probability distributions from users.

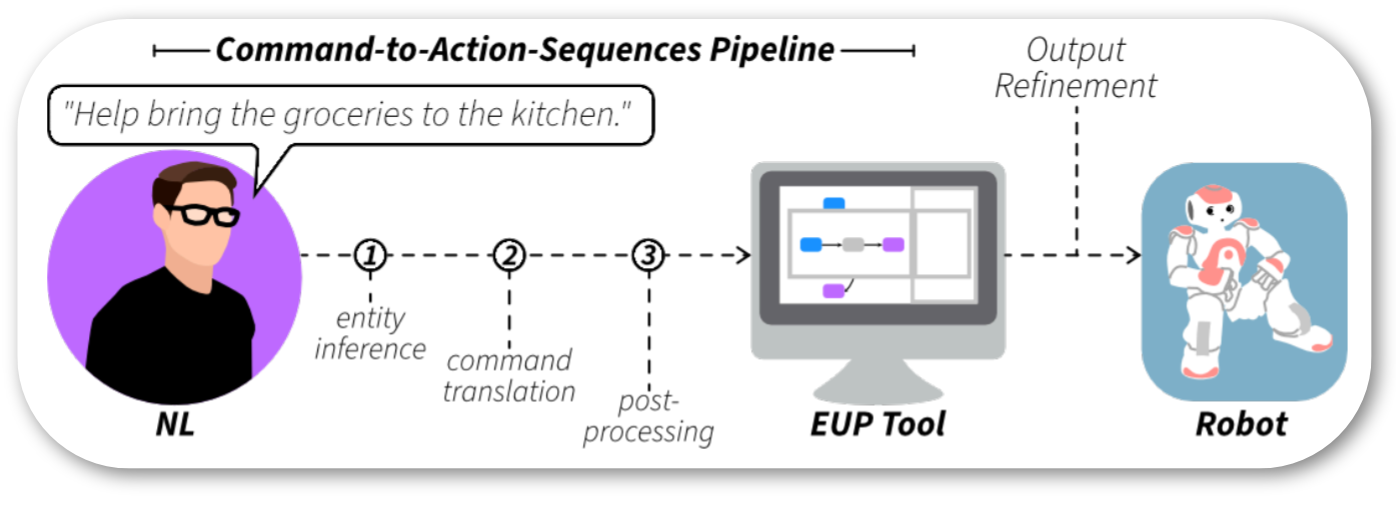

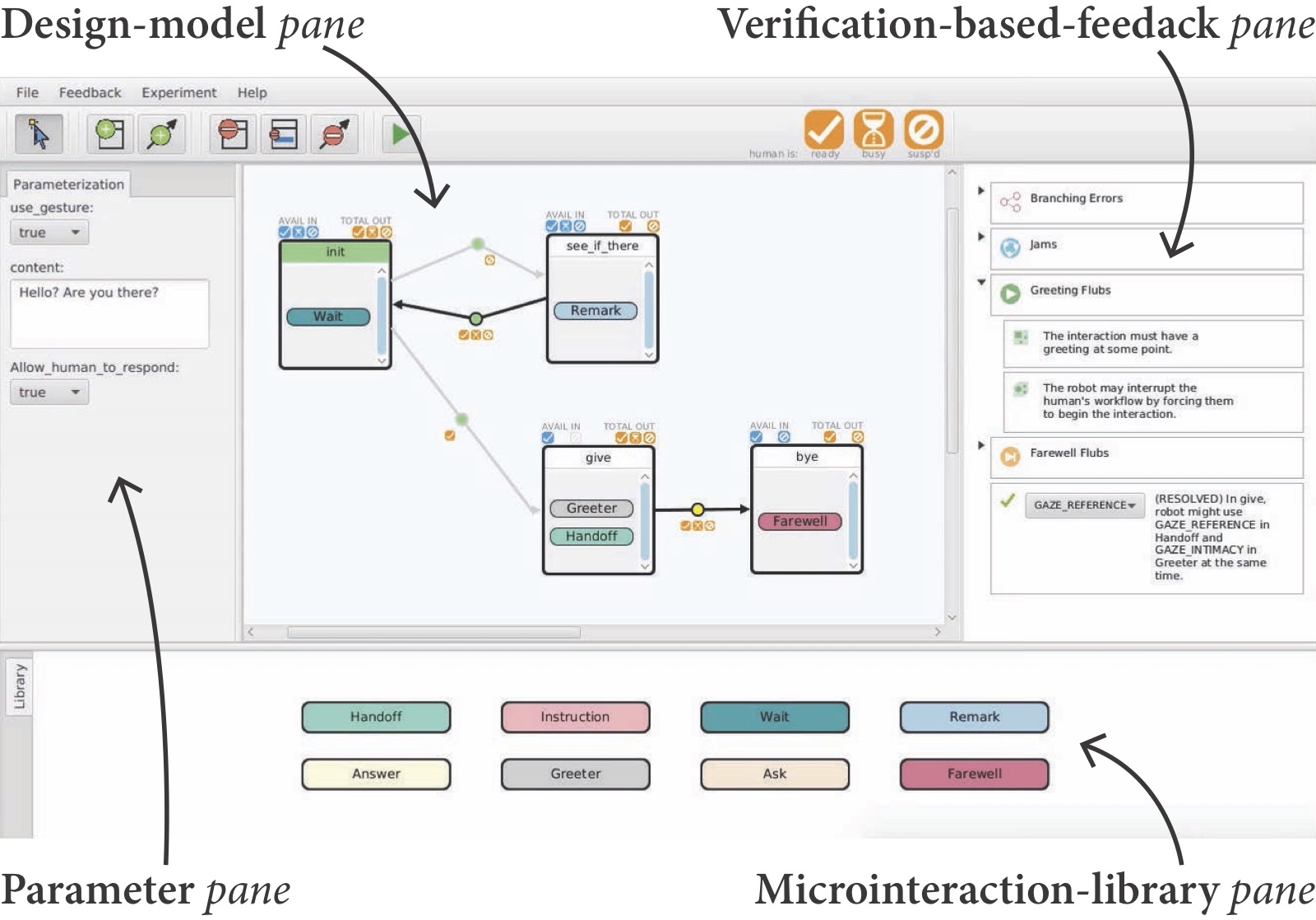

POLARIS

HRI 2024

Robot end-user programming with assistance from AI planning.

Interaction Specification Language

UR-RAD 2023

RO-MAN 2023

AAAI Spring Symposium 2023

Standardizing robot application development via a common, intermediate representation.

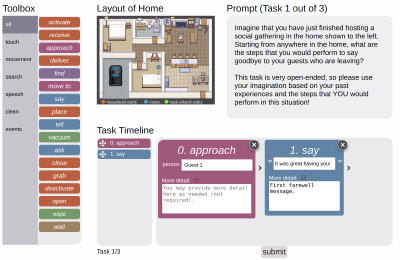

Crowdsourcing Task Traces

HRI 2023 LBR

Collecting examples of step-by-step tasks through an easy-to-use crowdsourcing interface.

This page is a modified version of minimal-jekyll (MIT license). Link icons are from Super Tiny Icons (MIT license).